NHTSA upgrades Autopilot investigation affecting 800k Teslas to ‘Engineering Analysis’

By Gabe Rodriguez Morrison

Earlier this year, the National Highway Traffic Safety Administration (NHTSA) launched an investigation into Tesla’s “phantom braking” issue, which occurs when the vehicle slows down abruptly.

In February, the NHTSA said that “the rapid deceleration can occur without warning, at random, and often repeatedly in a single drive cycle.” The issue is especially concerning on highways, where sudden braking could result in a rear-end collision.

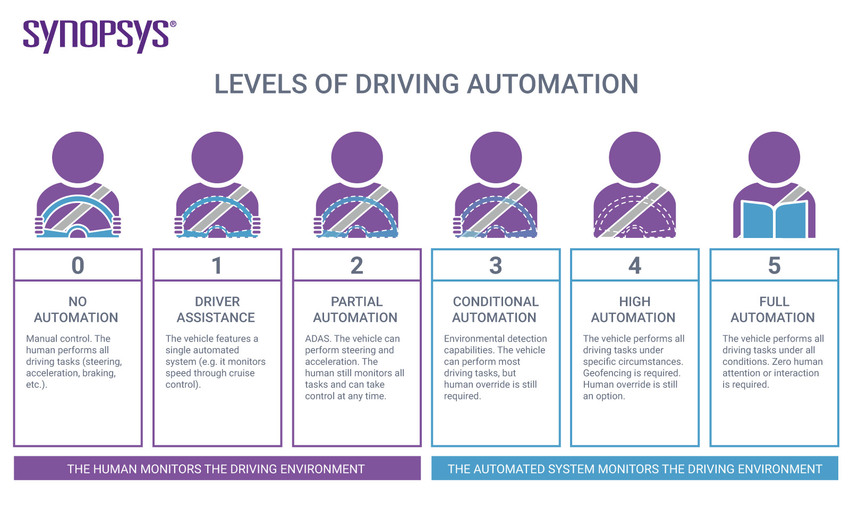

Since then, the NHTSA has formally upgraded its preliminary investigation to an “Engineering Analysis,” which is conducted before the agency decides on a recall. Regulators will evaluate 830,000 vehicles after several of the automaker’s vehicles collided with stopped emergency vehicles while Autopilot was engaged.

Tesla’s Autopilot feature is designed to help drivers navigate roads using a combination of cameras and artificial intelligence to detect other vehicles, pedestrians, stop lights, and more. Tesla instructs drivers to pay attention to the road and keep their hands on the steering wheel when using Autopilot.

Over the past four years, 16 Autopilot-engaged Teslas have crashed into parked first-responder vehicles, resulting in 15 injuries and one death. According to forensic data, most drivers had their hands on the steering wheel before impact, in compliance with Tesla’s instructions.

In this investigation, regulators will determine whether Tesla’s Autopilot feature undermines “the effectiveness of the driver’s supervision.”

“The investigation will assess the technologies and methods used to monitor, assist, and enforce the driver’s engagement with the dynamic driving task during Autopilot operation,” the NHTSA said.

This investigation affects about 830,000 Tesla vehicles built since 2014. Tesla introduced its Autopilot feature that year and transitioned to its own Autopilot hardware in 2016 after ending its partnership with Mobileye.

Tesla has yet to respond to the escalated investigation into its Autopilot feature.

By Alex Jones

Lions, Tigers, and Bears, Oh My! While Dorothy’s list of animals is not included in Elon Musk’s latest tweet regarding animal recognition by Tesla’s neural network, dogs, cats, and horses are.

Speaking to Tesla’s object recognition capabilities, it appears that Tesla is preparing to release an update that includes new visualizations of various animals that may stray across the car’s path.

As far as animal recognition goes, Teslas currently only visualize dogs, and the car will generally display a dog even if the animal is something else.

This is due to a lack of training: the car cannot yet differentiate between a dog, a horse, or a cat. It can be easily fixed by training the neural network on additional, categorized images of other animals.

It looks like we will soon get new animal visualizations. The most obvious candidates are animals that are often found near roads, such as deer, horses, possums, or other creatures.

While automotive competitors have made gains in developing electric vehicles after years of lagging behind Tesla, one cannot deny that Tesla’s neural networks are cutting edge.

These neural networks give Tesla the ability to collect live data from over one million participating vehicles. Tesla uses these massive datasets of real-world data to train its AI algorithms to identify objects that could be a hazard to drivers.

Tesla claims that “a full build of Autopilot neural networks involves 48 networks that take 70,000 GPU hours to train.”

While people have seen drastic improvements in the car’s ability to recognize various vehicle types (as seen in the improved visualizations in 2022.16), the potential addition of new animals is intriguing.

With FSD Beta 10.12, Tesla added several visualization updates. Will more animals be added in FSD Beta 10.13, which is expected in about two months?

Although it is too early to tell, it remains exciting that the object recognition capabilities of Teslas continue to improve.

The car knows that something is there, just doesn’t know that they are horses yet, but it will. Dogs, cats and many other animals will also be recognized.

— Elon Musk (@elonmusk) April 29, 2022

By Gabe Rodriguez Morrison

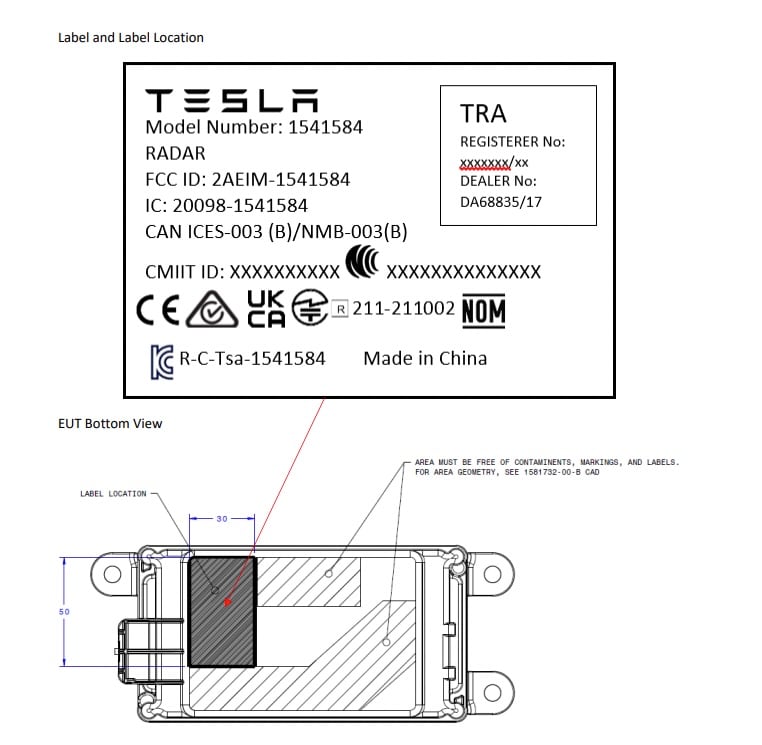

Tesla registered a new high-resolution radar unit with the U.S. Federal Communications Commission (FCC). Tesla’s intended use for these radar units is unknown, but they appear to be meant for imaging, similar to how LiDAR uses lasers to map its surroundings.

Although Tesla’s FSD software has used radar in the past, Elon Musk has a rather negative stance on LiDAR. For the purposes of autonomous driving, Musk sees LiDAR as a “fool’s errand.” Yet people have been spotting Tesla prototypes with LiDAR sensors since last year.

The latest example of Tesla using LiDAR comes from Twitter user @ManuelRToronto, who spotted a LiDAR-mounted Tesla in downtown Toronto, only a few months after the release of FSD Beta in Canada.

He took a video of the LiDAR-mounted Model Y with manufacturer license plates from California.

@elonmusk Spotted in Toronto. A @Tesla Model Y with #LiDAR and CA Manufacturing (MFG) Plates. It cut me off!, I guess it doesn’t have FSD or it may be camera shy, what’s the deal ? @NotATeslaApp pic.twitter.com/FYOdjFA1gq

— Manuel Rodriguez (@ManuelRToronto) May 16, 2022

There has been no official announcement from Tesla about these LiDAR-mounted vehicles, but it is safe to assume that Tesla will not use LiDAR on any production vehicles.

Tesla is likely using LiDAR to help train its machine learning algorithms, using it as the ground truth when checking for accuracy. Unlike cameras, LiDAR captures highly accurate 3D depth measurements.

LiDAR could be used to train these algorithms to interpret depth correctly, since its measurements are precise and require no interpretation.
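As a rough illustration of what "ground truth" means here, the sketch below scores a camera-based depth estimate against LiDAR measurements. It is not Tesla's method, which has not been published; the function name `depth_errors`, the sample values, and the use of `None` for pixels without a LiDAR return are all assumptions made for this example. The two metrics, mean absolute relative error and root-mean-square error, are common choices for evaluating depth estimation.

```python
import math


def depth_errors(predicted, lidar):
    """Score camera depth estimates (metres) against LiDAR depths (metres).

    `lidar` holds None wherever the sensor got no return; those points
    are skipped, because there is no ground truth to compare against.
    Returns (mean absolute relative error, root-mean-square error).
    """
    pairs = [(p, g) for p, g in zip(predicted, lidar) if g is not None and g > 0]
    if not pairs:
        raise ValueError("no valid LiDAR returns to compare against")
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / len(pairs)
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / len(pairs))
    return abs_rel, rmse


# Hypothetical sample: four points seen by the camera, three with LiDAR returns.
camera_depth = [10.0, 22.0, 33.0, 5.0]
lidar_depth = [10.0, 20.0, None, 4.0]

abs_rel, rmse = depth_errors(camera_depth, lidar_depth)
print(f"abs rel error: {abs_rel:.3f}, RMSE: {rmse:.3f} m")
```

A lower score on both metrics would mean the camera-based estimate tracks the LiDAR measurement more closely.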

Cameras are limited to 2D data, which computer vision algorithms must interpret to estimate 3D depth. The downside to this approach is that it requires a computational process, whereas a LiDAR sensor measures 3D depth directly and accurately.

However, LiDAR sensors are expensive; the advantage of relying solely on cameras is that it makes Teslas much more affordable.

LiDAR cannot be the only sensor used in a car because it can only construct a wireframe 3D environment. Without cameras, it wouldn’t be able to read traffic signs, traffic lights, or anything that doesn’t have depth.

Vehicles with LiDAR also rely on camera data and fuse the outputs of the two sensors to create a digital representation of the real world.

Musk believes that self-driving cars should navigate the world in the same way as human drivers. Since humans use their eyes and brain to navigate three-dimensional space, cars with cameras and enough computational power should be able to achieve the same thing.

“Humans drive with eyes and biological neural nets, so it makes sense that cameras and silicon neural nets are the only way to achieve a generalized solution to self-driving,” says Elon Musk.

Although other self-driving projects like Google’s Waymo have taken a LiDAR approach, Tesla is outpacing the competition using vision-only, machine learning, and the network effect of over 100,000 cars in the FSD Beta program.

Tesla’s vision-only approach has become smart enough that adding radar data gives the system more information than it needs and disorients the FSD software.

LiDAR and radar may be useful in training the FSD software, but Tesla wants to avoid using multiple sensors with potentially conflicting perceptions that would overwhelm the system.

With Tesla’s recent patent of a high-resolution radar, and the recent reported use of LiDAR, it is possible that Tesla’s Robotaxi will use radar and/or LiDAR in order to achieve full automation.

Tesla’s FSD software could eventually be segmented into consumer and commercial self-driving vehicles, with consumer cars reaching conditional autonomy (L2/L3) using vision-only and commercial vehicles achieving full autonomy (L4/L5) with the help of radar or possibly even LiDAR sensors.

Commercial robotaxis could even be multi-sensor (cameras, radar, LiDAR), costing much more, while consumer self-driving cars would be vision-only and more affordable.

Only very high resolution radar is relevant

— Elon Musk (@elonmusk) February 5, 2022